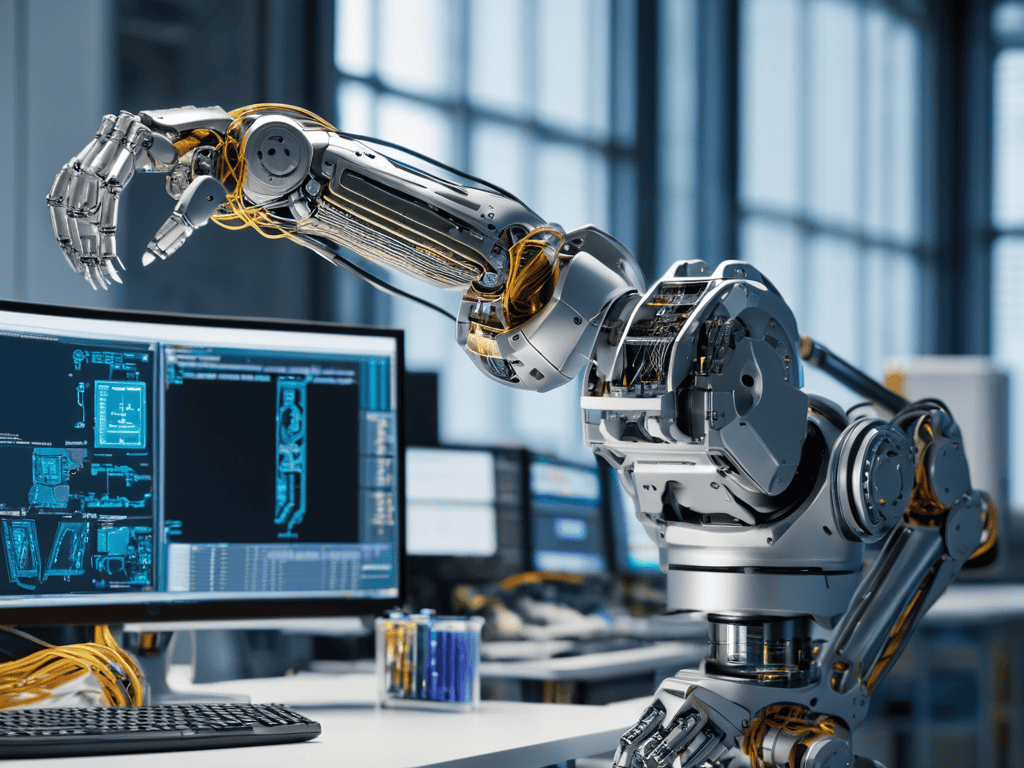

I still remember the first time I saw a Physical AI (Embodied AI) system in action; it was like watching a machine come to life. As an aerospace engineer, I’ve spent years designing aircraft, and the idea that machines can now learn and adapt in ways that feel almost human is nothing short of remarkable. But what really gets my blood pumping is the potential for embodied AI to change how we approach robotics and beyond. The integration of artificial intelligence with physical systems is no longer just a concept; it is a reality being shaped by innovators and engineers like me.

As someone who’s passionate about demystifying complex technologies, I want to cut through the hype and share my no-nonsense perspective on Physical AI (Embodied AI). In this article, I’ll provide you with a straightforward, experience-based look at the current state of embodied AI, highlighting its potential applications, challenges, and what it means for the future of robotics and engineering. My goal is to inspire a deeper understanding of this technology, and to show you that the real magic lies not in the marketing buzzwords, but in the elegant design and efficient solutions that embodied AI can provide.


Unlocking Physical AI Secrets

Exploring embodied intelligence, I’m fascinated by the cognitive robotics techniques that enable machines to learn and adapt in complex environments. The same integration of artificial intelligence with physical systems is driving progress in swarm intelligence systems, where multiple robots work together to achieve a common goal. This technology has far-reaching implications, from search and rescue missions to environmental monitoring.

Much of this progress depends on edge AI, where data is processed on or near the device rather than on a distant server, which reduces latency and enables real-time decision-making. This matters especially in human-robot interaction, where fast, reliable communication is crucial for efficient collaboration. With spatial reasoning, robots can better understand their surroundings and navigate complex spaces more reliably.

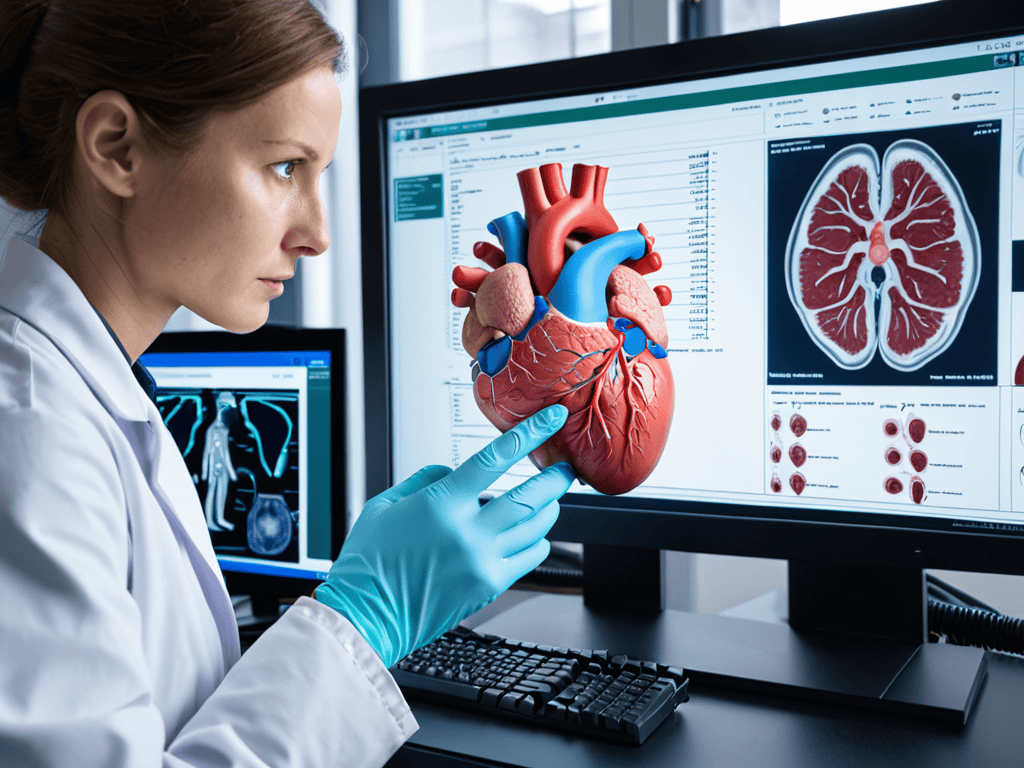

As I explore the frontiers of embodied intelligence, I’m struck by the potential of AI-enabled robots to transform industries such as manufacturing and healthcare. By combining artificial intelligence with physical capabilities, these robots can take on tasks that were once considered the exclusive domain of humans. The future of robotics is closely tied to advances in embodied intelligence, and I’m excited to see where this journey takes us.

Cognitive Robotics: The Brain of AI

Cognitive robotics aims to give machines the ability to learn and adapt in complex environments. This field has the potential to change the way we design and interact with robots, making them more intelligent and autonomous.

If AI has a brain, it is often a neural network that processes sensory information and makes decisions in real time. Loosely inspired by the way biological brains learn and adapt, cognitive robotics is pushing the boundaries of what machines can do in robotics and beyond.
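To make the "sense, then decide" idea concrete, here is a minimal sketch in Python, assuming a robot with two hypothetical proximity sensors (left and right, scaled so that 1.0 means an obstacle is very close). The tiny two-layer network maps those readings to a steering command. Its weights are hand-picked for illustration rather than learned; a real cognitive-robotics system would train a much larger network on data.

```python
import math

# Hypothetical hand-set weights (a trained network would learn these from data).
W_HIDDEN = [[2.0, 0.0],   # hidden unit 0 reacts to the left sensor
            [0.0, 2.0]]   # hidden unit 1 reacts to the right sensor
B_HIDDEN = [0.0, 0.0]
W_OUT = [1.0, -1.0]       # positive output means "steer right"
B_OUT = 0.0


def relu(x: float) -> float:
    return max(0.0, x)


def forward(sensors: list[float]) -> float:
    """One forward pass: sensor readings -> ReLU hidden layer -> steering in (-1, 1)."""
    hidden = [
        relu(sum(w * s for w, s in zip(row, sensors)) + b)
        for row, b in zip(W_HIDDEN, B_HIDDEN)
    ]
    return math.tanh(sum(w * h for w, h in zip(W_OUT, hidden)) + B_OUT)


def decide(sensors: list[float], deadband: float = 0.1) -> str:
    """Turn the network's continuous output into a discrete action."""
    steer = forward(sensors)
    if abs(steer) < deadband:
        return "straight"
    return "right" if steer > 0 else "left"


print(decide([0.9, 0.1]))  # obstacle close on the left, prints: right
```

The deadband keeps the robot from twitching left and right when both sensors read roughly the same.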

Swarm Intelligence Systems Take Flight

In aerial systems, swarm intelligence is one of the most intriguing applications of embodied AI. In a swarm, multiple agents interact and adapt to achieve a common goal, an idea that maps naturally onto drone swarms. By mimicking the behavior of birds or insects, these systems can accomplish complex tasks with remarkable efficiency.

The key to these systems is emergent behavior: each drone follows simple local rules, and complex group patterns arise without a central controller. This allows drone swarms to adapt and respond to changing environments, much like a flock of birds adjusting its formation in mid-flight.
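The classic illustration of this kind of emergence is Craig Reynolds' "boids" model, in which each agent follows three local rules: separation, alignment, and cohesion. The Python sketch below applies those rules to a small 2D drone swarm. The `Drone` class, neighbor radius, and rule weights are assumptions chosen for illustration, not parameters from any real flight stack. No drone knows a group plan, yet their velocities converge and the swarm moves as one.

```python
import math
from dataclasses import dataclass


@dataclass
class Drone:
    x: float
    y: float
    vx: float
    vy: float


def step(flock, radius=10.0, sep_dist=1.0, w_coh=0.01, w_ali=0.1, w_sep=0.05, dt=1.0):
    """Advance every drone one time step using only locally visible neighbors."""
    new_velocities = []
    for d in flock:
        neighbors = [o for o in flock
                     if o is not d and math.hypot(o.x - d.x, o.y - d.y) < radius]
        ax = ay = 0.0
        if neighbors:
            n = len(neighbors)
            # Cohesion: steer toward the neighbors' average position.
            ax += w_coh * (sum(o.x for o in neighbors) / n - d.x)
            ay += w_coh * (sum(o.y for o in neighbors) / n - d.y)
            # Alignment: steer toward the neighbors' average velocity.
            ax += w_ali * (sum(o.vx for o in neighbors) / n - d.vx)
            ay += w_ali * (sum(o.vy for o in neighbors) / n - d.vy)
            # Separation: spring-like push away from anyone closer than sep_dist.
            for o in neighbors:
                dx, dy = d.x - o.x, d.y - o.y
                dist = math.hypot(dx, dy)
                if 0.0 < dist < sep_dist:
                    push = w_sep * (sep_dist - dist) / dist
                    ax += push * dx
                    ay += push * dy
        new_velocities.append((d.vx + ax * dt, d.vy + ay * dt))
    # Apply all updates together so every drone reacted to the same snapshot.
    for d, (vx, vy) in zip(flock, new_velocities):
        d.vx, d.vy = vx, vy
        d.x += vx * dt
        d.y += vy * dt


def velocity_spread(flock):
    """Largest difference in vx or vy across the swarm (0 means perfectly aligned)."""
    return max(max(d.vx for d in flock) - min(d.vx for d in flock),
               max(d.vy for d in flock) - min(d.vy for d in flock))


swarm = [Drone(0, 0, 1.0, 0.0), Drone(2, 0, 0.8, 0.2), Drone(0, 2, 0.9, -0.1)]
print(f"before: {velocity_spread(swarm):.3f}")
for _ in range(200):
    step(swarm)
print(f"after:  {velocity_spread(swarm):.6f}")
```

Because every rule is symmetric between pairs of drones, the swarm's average velocity is preserved; the rules only redistribute it until everyone flies in formation.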

Physical AI (Embodied AI) Explained

Cognitive robotics, the part of AI that lets machines learn and adapt in complex environments, is crucial for building robots that can interact with and understand their physical surroundings. By combining cognitive robotics with swarm intelligence systems, we can create autonomous systems that not only think but also cooperate to achieve common goals.

The deeper I go into Physical AI, the clearer it becomes that understanding embodied intelligence requires a multidisciplinary approach, combining insights from robotics, computer science, and even biology. By examining the complex systems that underlie Physical AI, we can better appreciate its potential to transform industries and change the way we live and work. Whether you’re a seasoned engineer or just starting to explore AI, I encourage you to take a closer look at the applications and research emerging in this field, and to consider the implications of integrating artificial intelligence with physical systems.

Edge AI is changing the way robots perceive and respond to their environment. Processing data on board lets robots make decisions in real time without a round trip to centralized computing. In human-robot interaction, this means smoother, more efficient collaboration between humans and machines. For instance, AI-enabled robots can assist in search and rescue operations, navigating through debris with greater speed and precision.
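A quick back-of-the-envelope calculation shows why on-board processing matters: a robot keeps moving while it waits for a decision, so the distance it travels "blind" is speed × latency. The Python sketch below uses hypothetical figures (a robot moving at 2 m/s, a 150 ms round trip to a remote server versus 20 ms on-device, and a 10 cm safety margin); these are assumptions for illustration, not measurements.

```python
def blind_distance_m(speed_m_s: float, latency_ms: float) -> float:
    """Distance covered between sensing an obstacle and acting on the decision."""
    return speed_m_s * latency_ms / 1000.0


def within_budget(speed_m_s: float, latency_ms: float, max_blind_m: float) -> bool:
    """True if the processing delay keeps the blind distance within the allowed margin."""
    return blind_distance_m(speed_m_s, latency_ms) <= max_blind_m


# Hypothetical scenario: robot at 2 m/s with a 0.10 m safety margin.
for label, latency in [("remote server", 150.0), ("on-device", 20.0)]:
    d = blind_distance_m(2.0, latency)
    print(f"{label}: {d:.2f} m blind, within 0.10 m margin: {within_budget(2.0, latency, 0.10)}")
```

At these assumed numbers, the remote round trip leaves the robot 30 cm blind, three times the margin, while on-device processing keeps it to 4 cm.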

The key to unlocking the full potential of these systems lies in spatial reasoning in AI, which enables robots to understand and navigate their physical space. By mastering this aspect, we can create robots that not only coexist with humans but also provide critical support in various industries, from healthcare to manufacturing. As I see it, the future of robotics is not just about automation, but about creating intelligent machines that can augment human capabilities and improve our daily lives.

Edge AI Applications in Spatial Reasoning

Edge AI is especially promising for spatial reasoning, helping machines understand and navigate their surroundings. The implications reach from autonomous vehicles to smart homes, where devices can adapt and respond to their environment.
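One of the simplest forms of spatial reasoning a robot can run on the edge is path planning over an occupancy grid: the map is a grid of free and blocked cells, and the robot searches for a route through the free ones. The Python sketch below uses breadth-first search on a small hand-drawn grid (`#` marks an obstacle). The grid and function name are illustrative assumptions; practical planners typically use richer maps and heuristic searches such as A*.

```python
from collections import deque
from typing import Optional

Cell = tuple[int, int]


def shortest_path(grid: list[str], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Breadth-first search on a 4-connected occupancy grid.

    Returns the cells from start to goal (inclusive), or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from: dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


GRID = [
    "....",
    ".##.",
    "....",
]
path = shortest_path(GRID, (0, 0), (2, 3))
print(path)
```

Because breadth-first search expands cells in order of distance, the first time it reaches the goal it has found a route with the fewest moves, here 5 moves around the wall.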

In aviation, real-time processing is crucial for edge AI applications because it allows swift decisions and reactions to changing conditions. This capability is essential for safe and efficient flight operations, and I’m excited to explore its possibilities further.

Human-Robot Interaction With AI-Enabled Robots

Human-robot interaction is where AI-enabled robots could most change the way we collaborate with machines. By integrating artificial intelligence with physical systems, we can create robots that learn and adapt to their environment, making them more effective and efficient at their tasks.

The key to successful human-robot interaction lies in the design of intuitive interfaces, which enable seamless communication between humans and robots. This allows for more effective teamwork, as humans can provide high-level guidance, while robots handle complex tasks with precision and speed, ultimately enhancing productivity and safety in various industries.
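That division of labor, where the human supplies the goal and the robot works out the motion, can be sketched in a few lines. In this minimal Python example, an operator picks a target point and the robot moves toward it, slowing down as a person comes near and stopping inside a protective radius. The radii, speed limit, 1-second step, and linear slow-down are hypothetical values chosen for illustration, not figures from any safety standard.

```python
import math

Point = tuple[float, float]


def next_velocity(robot: Point, goal: Point, human: Point,
                  max_speed: float = 1.0, slow_radius: float = 2.0,
                  stop_radius: float = 0.5) -> Point:
    """Velocity command (m/s) toward the operator's goal, scaled down near a person."""
    dx, dy = goal[0] - robot[0], goal[1] - robot[1]
    dist_goal = math.hypot(dx, dy)
    if dist_goal == 0.0:
        return (0.0, 0.0)  # already at the goal
    d_human = math.hypot(human[0] - robot[0], human[1] - robot[1])
    if d_human <= stop_radius:
        scale = 0.0  # person inside the protective radius: stop
    elif d_human >= slow_radius:
        scale = 1.0  # nobody nearby: full speed
    else:
        # Linear ramp from 0 at stop_radius to 1 at slow_radius.
        scale = (d_human - stop_radius) / (slow_radius - stop_radius)
    # Cap at the remaining distance so a 1 s step cannot overshoot the goal.
    speed = min(max_speed * scale, dist_goal)
    return (speed * dx / dist_goal, speed * dy / dist_goal)


# The operator only says "go to (10, 0)"; the robot handles speed and safety.
print(next_velocity((0.0, 0.0), (10.0, 0.0), human=(0.0, 1.25)))  # prints (0.5, 0.0)
```

Keeping the human's input at the level of goals means the operator never has to micromanage speed; the robot applies the same safety rule no matter who set the target.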

5 Key Takeaways for Unlocking the Potential of Physical AI

- Understand the Interplay Between Hardware and Software: Physical AI relies on the symbiotic relationship between physical systems and artificial intelligence, so grasping how these components interact is crucial

- Design with Adaptability in Mind: Embodied AI systems must be able to learn and adapt to new situations, making flexibility a key consideration in their design

- Focus on Edge AI for Real-Time Processing: By processing data at the edge, Physical AI systems can react more quickly to changing conditions, which is particularly important in applications like robotics and autonomous vehicles

- Leverage Cognitive Architectures for Decision Making: Cognitive robotics can provide the brainpower behind Physical AI, enabling systems to make decisions based on complex, dynamic data

- Prioritize Human-Robot Interaction for Seamless Integration: As Physical AI becomes more prevalent, designing systems that can interact intuitively with humans will be essential for widespread adoption and effective application

Key Takeaways from Embodied AI

As we’ve explored the realm of Physical AI, it’s clear that embodied AI systems are redefining the boundaries of robotics and beyond, enabling machines to learn, adapt, and interact with their environment in unprecedented ways.

Through advancements in cognitive robotics and swarm intelligence, we’re witnessing the emergence of sophisticated AI systems that can navigate, communicate, and even collaborate with humans, unlocking new possibilities for industries like aerospace and manufacturing.

Ultimately, Physical AI holds tremendous promise for innovation and progress, from enhancing human-robot interaction to pushing the frontiers of spatial reasoning and edge AI applications, and it’s an exciting time to be at the forefront of this technological revolution.

Unlocking the Future of Flight

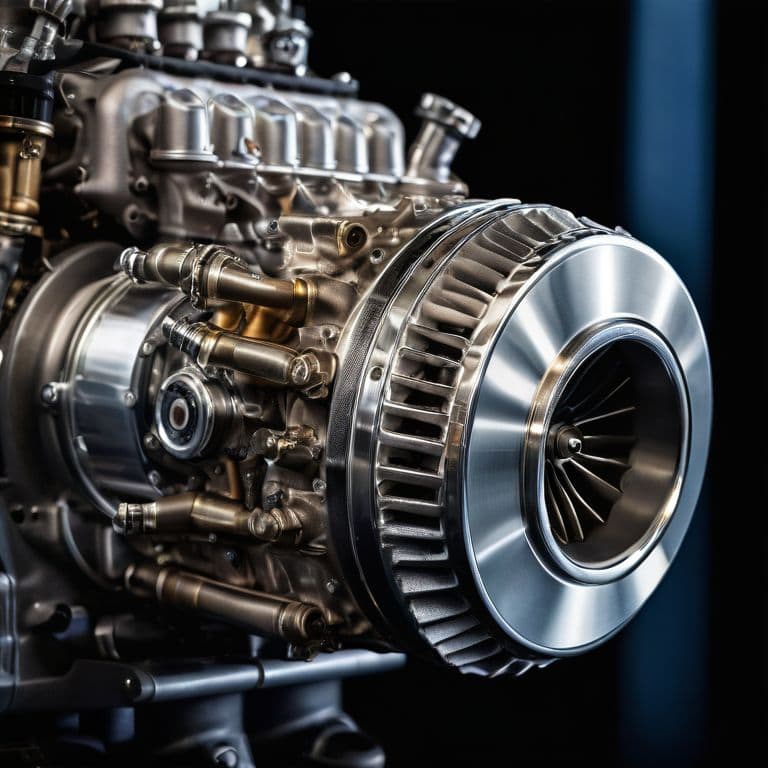

As I see it, Physical AI or Embodied AI is not just about merging machines with intelligence, but about crafting a symphony of metal, code, and air that dances on the principles of aerodynamics and efficiency, redefining what it means for a machine to take flight.

Simon Foster

Conclusion

As we conclude our journey into the realm of Physical AI, also known as Embodied AI, it’s clear that this technology is revolutionizing the way machines interact with and learn from their environment. From cognitive robotics to swarm intelligence systems, and from edge AI applications to human-robot interaction, the potential of Physical AI to transform industries and improve lives is vast. By understanding how Physical AI enables machines to adapt, learn, and make decisions based on real-world data, we can unlock new possibilities for innovation and progress.

As we look to the future, it’s exciting to consider the possibilities that Physical AI may bring. Whether it’s enhancing safety, improving efficiency, or simply making our lives more convenient, the impact of embodied AI will be felt across many aspects of daily life. Having spent my career designing aircraft and now writing about the wonders of flight engineering, I am inspired by the potential of Physical AI to take us to new heights, and I believe that by embracing this technology, we can create a brighter, more innovative future for all.

Frequently Asked Questions

How does embodied AI integrate with existing aerodynamic systems to enhance flight performance?

As an aerospace engineer, I’ve seen embodied AI integrated with existing aerodynamic systems to enhance flight performance, for example by optimizing airflow and reducing drag. By embedding AI in aircraft, we can create adaptive systems that adjust to changing conditions, leading to more efficient and stable flight.

What role does machine learning play in the development of autonomous embodied AI systems for aircraft?

Machine learning is the backbone of autonomous embodied AI in aircraft, enabling systems to learn from experience and adapt to new situations. By analyzing vast amounts of flight data, ML algorithms can optimize flight paths, predict maintenance needs, and even improve aerodynamic performance, making flying safer and more efficient.
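As a deliberately simple stand-in for the predictive-maintenance models mentioned above, the Python sketch below fits a least-squares trend line to a wear indicator logged against flight hours and extrapolates when it will cross a service threshold. The wear readings, the threshold, and the helper names are made up for illustration; production systems use far richer models and data. The point is only to show how learning from flight data turns into a maintenance forecast.

```python
from typing import Optional


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def hours_until_threshold(hours: list[float], wear: list[float],
                          threshold: float) -> Optional[float]:
    """Flight hours remaining after the last reading until the fitted trend hits threshold."""
    a, b = fit_line(hours, wear)
    if b <= 0:
        return None  # wear is not increasing, so no crossing is predicted
    return (threshold - a) / b - hours[-1]


# Hypothetical wear index logged every 100 flight hours; service is due at 1.0.
hours = [0.0, 100.0, 200.0, 300.0]
wear = [0.10, 0.20, 0.30, 0.40]
print(hours_until_threshold(hours, wear, threshold=1.0))
```

With these invented readings the trend rises by 0.001 per flight hour, so about 600 flight hours remain before the threshold is reached.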

Can embodied AI be used to create more efficient and adaptive wing designs for next-generation aircraft?

As an aerospace engineer, I’m excited about embodied AI’s potential to optimize wing designs. By integrating sensors and AI, we can create adaptive wings that adjust to changing flight conditions, reducing drag and increasing efficiency. This fusion of physical and artificial intelligence could revolutionize aircraft design, making next-generation planes more efficient, safer, and environmentally friendly.
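At its core, a wing that adjusts to changing flight conditions is a feedback loop. The Python sketch below assumes a toy linear lift model (lift coefficient = a condition-dependent baseline plus 0.05 per degree of flap deflection) and an integral-style update that nudges the flap until the measured lift matches a target. The model, gain, and ±10° travel limit are hypothetical; a real adaptive wing would rely on validated aerodynamic models and certified control laws.

```python
def settle_flap(cl_target: float, cl_base: float, lift_per_deg: float = 0.05,
                gain: float = 5.0, limit_deg: float = 10.0, steps: int = 100,
                flap_deg: float = 0.0) -> float:
    """Integral-style trim loop: adjust flap deflection until lift matches the target."""
    for _ in range(steps):
        # Toy "sensor" reading from an assumed linear lift model.
        cl_measured = cl_base + lift_per_deg * flap_deg
        flap_deg += gain * (cl_target - cl_measured)
        # Respect actuator travel limits.
        flap_deg = max(-limit_deg, min(limit_deg, flap_deg))
    return flap_deg


# Baseline lift coefficient 0.3, target 0.5: settles near 4 degrees.
flap = settle_flap(cl_target=0.5, cl_base=0.3)
print(f"{flap:.2f} deg")
# Conditions change and the baseline rises to 0.4: the loop re-trims to about 2 degrees.
flap = settle_flap(cl_target=0.5, cl_base=0.4, flap_deg=flap)
print(f"{flap:.2f} deg")
```

Each iteration shrinks the remaining error by a factor of 1 − gain × lift_per_deg (0.75 here), so the loop converges only while that product stays between 0 and 2. If the target needs more deflection than the actuator allows, the flap simply holds at its limit.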